Video Stream Processing

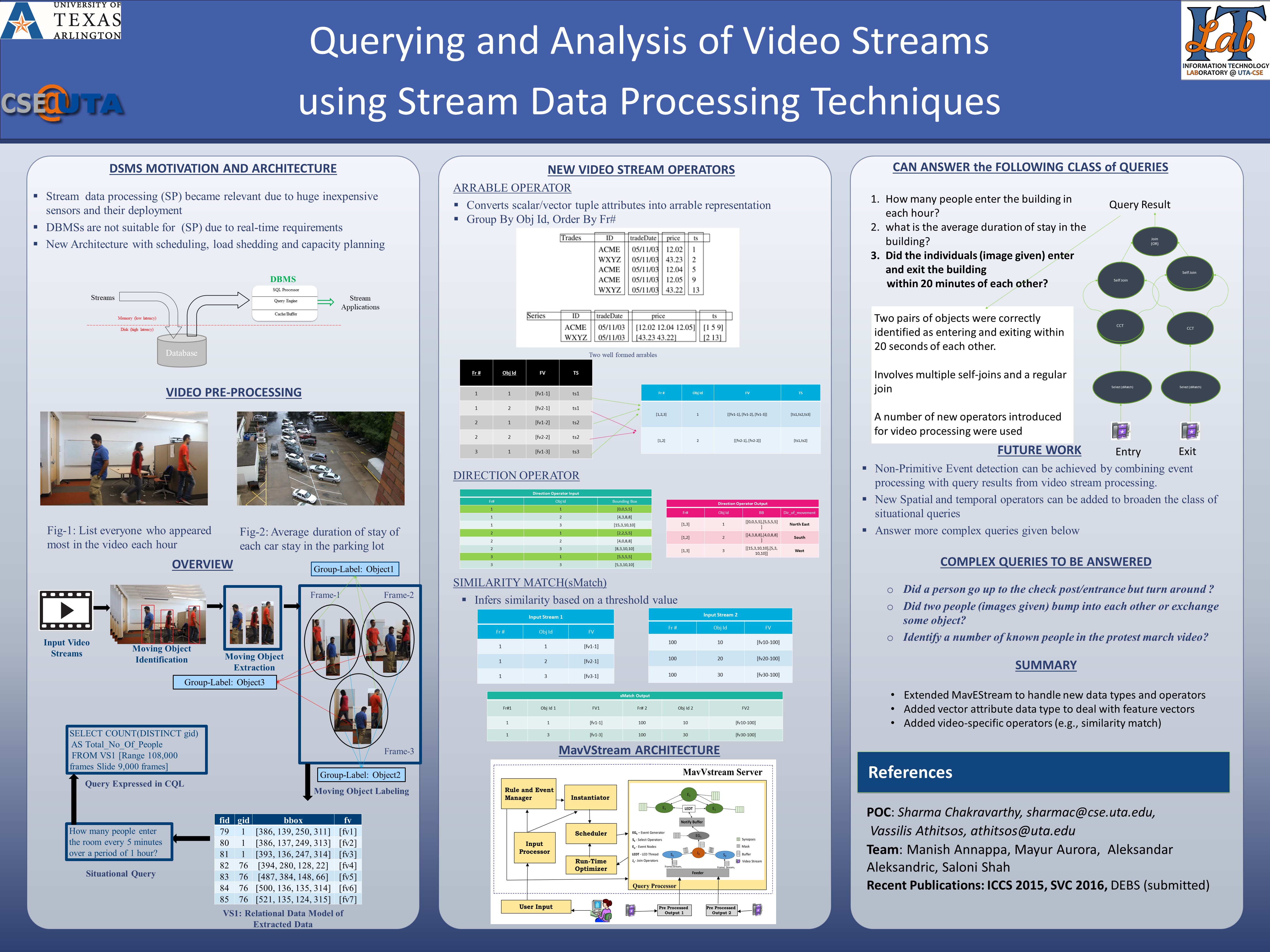

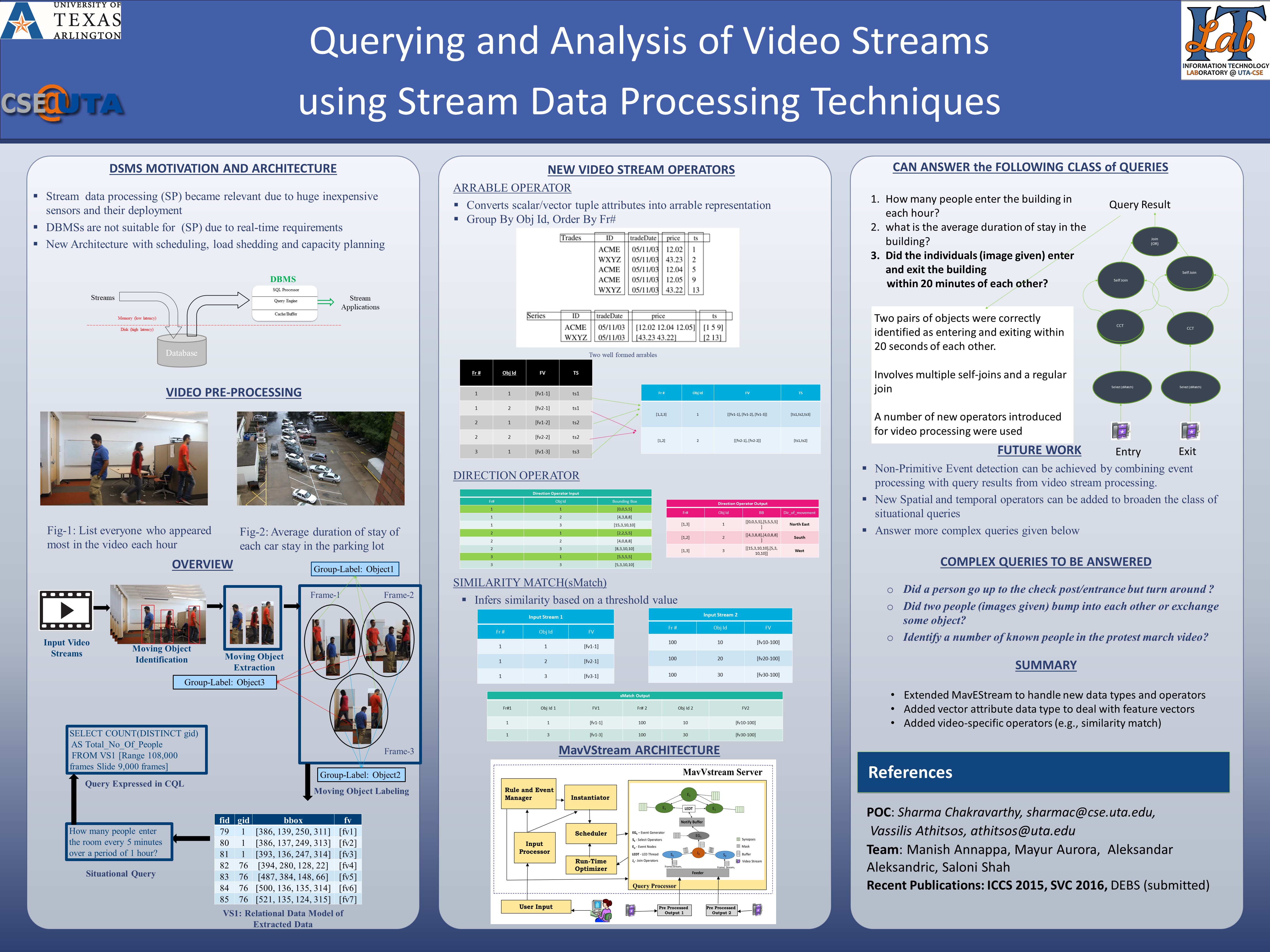

There are independent bodies of research in video/image processing and in stream processing (the latter mainly applied to sensor streams). This project combines and extends these two approaches holistically, as needed for situation monitoring of video footage. This is necessary and important because the amount of video footage is increasing due to inexpensive cameras, the need for surveillance, security cameras, CCTV cameras, UAVs (unmanned aerial vehicles), etc. Manually monitoring these videos is labor intensive (and hence expensive), and spontaneous events that occur in a fraction of a second may be missed by human eyes. Moreover, keeping track of statistics to retrieve real-time information is not feasible manually. For example, obtaining information such as the number of people who visited an office, the frequency of their visits, and the duration of each stay is nearly impossible with manual monitoring.

The goal is to go beyond current custom systems (e.g., VIRAT) that are targeted at specific situations (parking lot monitoring, personnel movement). We plan to support easy-to-write queries such as "for each hour, list all persons who entered and subsequently exited a building," from which the duration of stay of each individual can also be computed. Another example is "list the average number of cars in a parking lot each hour on weekdays between 8am and 5pm, or on weekends."
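As a rough illustration of the first query, the sketch below assumes that pre-processing has already produced a time-ordered stream of `(person_id, kind, timestamp)` tuples, where `kind` is `"enter"` or `"exit"`. The tuple layout, the function name `stays_by_hour`, and the choice to group results by the hour of exit are all assumptions made for this example; they are not part of the project's query language.

```python
from collections import defaultdict
from datetime import datetime


def stays_by_hour(events):
    """Pair each exit with the most recent entry of the same person.

    events: iterable of (person_id, kind, timestamp), sorted by timestamp,
            with kind in {"enter", "exit"} (hypothetical schema).
    Returns {hour_start: [(person_id, duration_seconds), ...]},
    grouped by the hour in which the exit occurred.
    """
    open_entries = {}            # person_id -> timestamp of pending entry
    result = defaultdict(list)
    for person_id, kind, ts in events:
        if kind == "enter":
            open_entries[person_id] = ts
        elif kind == "exit" and person_id in open_entries:
            entered = open_entries.pop(person_id)
            hour = ts.replace(minute=0, second=0, microsecond=0)
            result[hour].append((person_id, (ts - entered).total_seconds()))
        # Exits with no matching entry are ignored in this sketch.
    return dict(result)


events = [
    ("p1", "enter", datetime(2017, 6, 1, 9, 5)),
    ("p2", "enter", datetime(2017, 6, 1, 9, 20)),
    ("p1", "exit", datetime(2017, 6, 1, 9, 50)),
    ("p2", "exit", datetime(2017, 6, 1, 10, 10)),
    ("p3", "exit", datetime(2017, 6, 1, 10, 15)),  # never seen entering
]
report = stays_by_hour(events)
for hour, stays in sorted(report.items()):
    print(hour, stays)
```

A continuous-query engine would evaluate this incrementally over an unbounded stream rather than over a finite list; the loop above only mirrors the pairing logic.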

We view this project as one component of fusion, which involves multi-source input analysis. Another of our projects explores multi-source text analysis.

We propose to use an SQL-like language for expressing queries and to process them with suitable optimizations. Continuous queries use the notion of a window for processing unbounded streams. We propose to support general-purpose continuous queries for this domain by developing, where necessary, extended or new data models, operators, and other relevant constructs. Several stream processing systems are already used to run continuous queries on network and financial stream data. Video footage can be considered a stream of frames with changing content. Hence, stream processing frameworks can be repurposed to express and compute complex situations captured in video streams. We plan to extend our stream and complex event processing framework and system (MavEStream) to build an expressive video stream processing system. Our approach for this project is as follows:

- Preprocessing phase: Extract relevant information from each frame of video footage to generate a stream that captures its contents (e.g., objects, their locations in a frame, feature vectors, etc.). This phase will yield a relational representation or an extension such as an arrable, which will be used for posing continuous queries. Preprocessing of video frames will be carried out using various image processing and computer vision techniques. The challenge is to identify the same object when it is present across several frames. Even for this phase, we plan to explore a continuous query processing approach for expressing pre-processing that may be context dependent.

- Extending the data model and identifying new operators: Current stream processing frameworks typically support relations and relational operators. However, additional data types are needed for capturing bounding boxes and feature vectors. New operators for identifying objects across frames (e.g., match) are needed during pre-processing. Beyond pre-processing, extensions to the window concept and computations on object interaction need to be investigated.

- Optimization of continuous queries with new operators: The arrable data type (Alberto Lerner and Dennis Shasha, "AQuery: Query Language for Ordered Data, Optimization Techniques, and Experiments," VLDB 2003) was introduced as an extension of a relation, along with new operators. This necessitated new optimization techniques for processing queries with these operators and data types. Similarly, the extensions we propose (both data types and operators) need to be optimized for efficient evaluation. The semantics of the operators and their equivalences need to be established.

- Complex event processing: Some situations may be better expressed using a combination of continuous queries that generates an interesting event (e.g., object x crossed a check post), which may be combined with other interesting events (e.g., an item was exchanged) to infer that the object crossed with an item. We will explore the expressiveness of situations using continuous queries alone as well as a combination of continuous queries and complex events.
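To make the pre-processing and windowing steps above more concrete, here is a minimal sketch of a match-style operator followed by a simple windowed aggregate. It is an illustration under stated assumptions, not the MavEStream operator: objects are matched across consecutive frames by greedy bounding-box overlap (intersection over union) only, whereas the project also envisions feature vectors. The names `iou`, `match_frames`, and `distinct_objects_per_window`, the box layout `(x1, y1, x2, y2)`, and the 0.5 threshold are all hypothetical.

```python
from collections import defaultdict
from itertools import count


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_frames(frames, threshold=0.5):
    """Assign object ids by greedy overlap with the previous frame.

    frames: list of per-frame lists of boxes (assumed detector output).
    Yields relational tuples (frame_no, object_id, box).
    """
    next_id = count(1)
    previous = []                      # (object_id, box) from the last frame
    for frame_no, boxes in enumerate(frames):
        current, used = [], set()
        for box in boxes:
            best_id, best_score = None, threshold
            for obj_id, prev_box in previous:
                score = iou(box, prev_box)
                if obj_id not in used and score >= best_score:
                    best_id, best_score = obj_id, score
            if best_id is None:        # no sufficient overlap: new object
                best_id = next(next_id)
            used.add(best_id)
            current.append((best_id, box))
            yield (frame_no, best_id, box)
        previous = current


def distinct_objects_per_window(rows, window_size):
    """Count distinct object ids in tumbling windows of window_size frames."""
    seen = defaultdict(set)
    for frame_no, obj_id, _box in rows:
        seen[frame_no // window_size].add(obj_id)
    return {window: len(ids) for window, ids in seen.items()}


frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(1, 0, 11, 10), (50, 51, 60, 61)],
    [(2, 0, 12, 10), (100, 100, 110, 110)],
]
rows = list(match_frames(frames))
counts = distinct_objects_per_window(rows, window_size=2)
```

The tuples produced by `match_frames` stand in for the relational stream described in the preprocessing phase, and the tumbling window is only one of several window types a continuous query might use.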

In addition to the above, we are teaming up with a faculty member in image/video processing to investigate improving and extending object identification as part of pre-processing.

People

Publications

Technical Report

- Sharma Chakravarthy: Augmenting Video Analytics Using Stream and Complex Event Processing. AFRL Summer Faculty Fellowship Program (SFFP) (2017)